Web Scraping with Scrapy

Web scraping is a valuable tool in many fields, including data science and the semantic analysis of websites. In this tutorial, we will use Scrapy for this purpose. Scrapy provides its own project framework, but we'll write a standalone Python script instead and use the Scrapy shell for testing. Our goal is to count how often each word occurs in the posts of this blog and to visualize the word counts with the Matplotlib library.

Using The Scrapy Shell

Before writing the script, we need filters that specify which HTML elements to extract, and choosing them correctly is essential to our goal. The Scrapy shell helps with this. To start it, run "scrapy shell" with the target page's URL, as shown below.

```shell
scrapy shell 'https://extendedtutorials.blogspot.com/2023/11/chatgpt-in-your-computer-gpt4all-and.html'
```

To write filters, we first need to understand the page's HTML structure. For this, I selected my target element with the browser's inspection tool.

Once we understand the structure, we can test filters in the shell. The shell starts with a "response" object that holds the page we passed on the command line. We can apply filters to it directly and call "getall" to get every match as a list of strings.

As the code below shows, a CSS selector works as follows:

- It combines an element type and its class with a dot ("div.jump-link").
- It narrows to descendant elements by appending them after a space ("div.jump-link a").
- It extracts an attribute value with "::attr()".

Testing filters this way is important, because it shows how specific a selector must be to return exactly the elements we want.

```python
>>> response.css("div.jump-link a::attr(href)").getall()
['https://extendedtutorials.blogspot.com/2023/10/social-dilemmas.html',
 'https://extendedtutorials.blogspot.com/2023/11/reverse-search-engines.html',
 'https://extendedtutorials.blogspot.com/2023/11/text-to-image-generation-with-stable.html']
```
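The sketch below shows what that selector matches without opening a network connection. It approximates the selector with Python's standard-library "html.parser". This is a swapped-in technique, not how Scrapy evaluates selectors (Scrapy's own selectors are built on the parsel library). The class "JumpLinkCollector" and the sample HTML are hypothetical.

The parser collects the "href" of every "a" element nested anywhere inside a "div" whose class list contains "jump-link". That is the same set of values "div.jump-link a::attr(href)" selects on reasonably well-formed HTML.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class JumpLinkCollector(HTMLParser):
    """Hypothetical helper approximating 'div.jump-link a::attr(href)'.

    Assumes reasonably well-formed HTML (every non-void tag is closed).
    """

    def __init__(self):
        super().__init__()
        self.stack = []   # one bool per open element: is it a div.jump-link?
        self.hrefs = []   # collected href values, in document order

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        # An <a> counts only if some enclosing element is a div.jump-link
        if tag == "a" and any(self.stack) and attrs.get("href") is not None:
            self.hrefs.append(attrs["href"])
        if tag not in VOID_TAGS:
            self.stack.append(tag == "div" and "jump-link" in classes)

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.stack:
            self.stack.pop()


# Made-up sample markup: two jump links and one unrelated link
html = """
<div class="post-outer">
  <div class="jump-link flat-button"><a href="https://example.com/post-1.html">More</a></div>
  <a href="https://example.com/not-selected.html">Elsewhere</a>
  <div class="jump-link"><a href="https://example.com/post-2.html">More</a></div>
</div>
"""

collector = JumpLinkCollector()
collector.feed(html)
print(collector.hrefs)
# ['https://example.com/post-1.html', 'https://example.com/post-2.html']
```

The link outside any "jump-link" div is skipped. This mirrors why the shell output above lists only post URLs.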

Having validated our filters, we're ready to write our script.

Writing The Python Script

To begin, we import the modules our script needs: "re" for splitting text into words, Scrapy and its helpers for crawling, and Matplotlib for plotting.

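```python
import re  # regular expressions for splitting text into words

import scrapy
from scrapy.crawler import CrawlerProcess  # runs a crawl from a plain script
from scrapy.utils.project import get_project_settings
from scrapy.signalmanager import dispatcher  # connects handlers to Scrapy signals

import matplotlib.pyplot as plt
```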

Next, we define our Spider class. "start_urls" lists the entry points of the crawl. The "name" attribute is required by Scrapy, even though we don't use it here. "parse" is the default callback: Scrapy calls it with the response for each starting URL.

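```python
class MySpider(scrapy.Spider):
    name = "myspider"  # required by Scrapy, unused in this script
    start_urls = [
        'https://extendedtutorials.blogspot.com/'
    ]

    def parse(self, response):
        # Yield the URL of every jump link on the home page
        for href in response.css("div.jump-link a::attr(href)").getall():
            yield {"url": href}
```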

With this basic Spider class, we obtain the URLs of the blog posts. Next, we open a post page in the Scrapy shell and work out selectors for the post body and its labels.

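After testing those filters, we can expand the Spider class. Note the change to the "parse" function below: instead of yielding the URLs, it uses "scrapy.Request" to send a request to each one. The callback given to the request, "parse_data", runs when the response from that URL arrives. "parse_data" then does three things:

- It extracts the post's labels and body text.
- It splits the text into lowercase words.
- It yields the labels together with a dictionary of word counts.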

```python
class MySpider(scrapy.Spider):
    name = "myspider"
    start_urls = [
        'https://extendedtutorials.blogspot.com/'
    ]

    def parse(self, response):
        # Follow each post link; parse_data receives that post's response
        for href in response.css("div.jump-link a::attr(href)").getall():
            yield scrapy.Request(href, callback=self.parse_data)

    def parse_data(self, response):
        labels = response.css("span.post-labels a::text").getall()
        body = response.css("div.post-body *::text").getall()
        # Lowercased words; the hyphen is last in the class so it is literal
        words = [w.lower() for w in re.findall(r"[\w']+[\w'-]*", " ".join(body))]
        # Count every word in a single pass over the list
        word_dict = {}
        for word in words:
            word_dict[word] = word_dict.get(word, 0) + 1
        yield {
            "labels": labels,
            "dict": word_dict
        }
```
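The tokenize-and-count step in "parse_data" can be tried on its own with plain Python. The sketch below uses "collections.Counter", which counts all words in one pass. A dictionary comprehension calling "words.count" for each word rescans the whole list every time. The sample text and the name "count_words" are made up for illustration.

It also shows why the hyphen belongs at the end of the character class. Written as "'-_", it forms a range from "'" to "_" that includes digits, capital letters and punctuation such as "," and ".". Words would then absorb trailing punctuation.

```python
import re
from collections import Counter

# A word starts with word characters or apostrophes and may continue with
# word characters, apostrophes or hyphens ('-' is last, so it is literal).
WORD_RE = re.compile(r"[\w']+[\w'-]*")


def count_words(text_fragments):
    """Join the text nodes, lowercase every token and count occurrences."""
    words = [w.lower() for w in WORD_RE.findall(" ".join(text_fragments))]
    return Counter(words)


body = ["Scrapy crawls pages.", "Scrapy parses pages, then Scrapy's shell tests selectors."]
counts = count_words(body)
print(counts.most_common(2))  # [('scrapy', 2), ('pages', 2)]

# Range vs. literal hyphen inside a character class:
print(re.findall(r"[\w']+[\w'-_]*", "pages, then."))  # ['pages,', 'then.']
print(WORD_RE.findall("pages, then."))               # ['pages', 'then']
```

"Counter" is a "dict" subclass, so it can be yielded in place of "word_dict" without changing how the results are used later.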

With the Spider class written, we can collect the scraped data and print it to the console to validate the script. "CrawlerProcess" lets us run Scrapy from within our script, so we don't need to create a Scrapy project. Its "start" method blocks until the crawl has finished. To collect the items, we connect a handler function to Scrapy's "item_scraped" signal through the dispatcher.

```python
results = []


def crawler_results(signal, sender, item, response, spider):
    # Called through the item_scraped signal for every item a spider yields
    results.append(item)


if __name__ == '__main__':
    dispatcher.connect(crawler_results, signal=scrapy.signals.item_scraped)
    process = CrawlerProcess(get_project_settings())
    process.crawl(MySpider)
    process.start()  # blocks until the crawl has finished
    print(results)
```
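Each item collected in "results" holds the counts for a single post, while our stated goal is a count for the whole blog. If a blog-wide total is wanted, one option is to add up the per-post dictionaries with "collections.Counter". In this sketch, the helper "merge_counts" is hypothetical and the items are made up, shaped like the ones "parse_data" yields.

```python
from collections import Counter


def merge_counts(items):
    """Sum the per-post word dictionaries into one blog-wide Counter."""
    total = Counter()
    for item in items:
        total.update(item["dict"])  # Counter.update adds counts instead of replacing
    return total


# Made-up items in the same shape as those yielded by parse_data
results = [
    {"labels": ["AI"], "dict": {"model": 5, "the": 12}},
    {"labels": ["Search"], "dict": {"search": 7, "the": 9}},
]

blog_counts = merge_counts(results)
print(blog_counts.most_common(2))  # [('the', 21), ('search', 7)]
```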

Once the script finishes, let's check the printed output.

It seems that we successfully retrieved the word counts from our blog posts. However, printing the results is inadequate for analysis. Let's generate a plot using Matplotlib for a visual representation.

results = []

def crawler_results(signal, sender, item, response, spider):

results.append(item)

if __name__ == '__main__':

dispatcher.connect(crawler_results, signal=scrapy.signals.item_scraped)

process = CrawlerProcess(get_project_settings())

process.crawl(MySpider)

process.start()

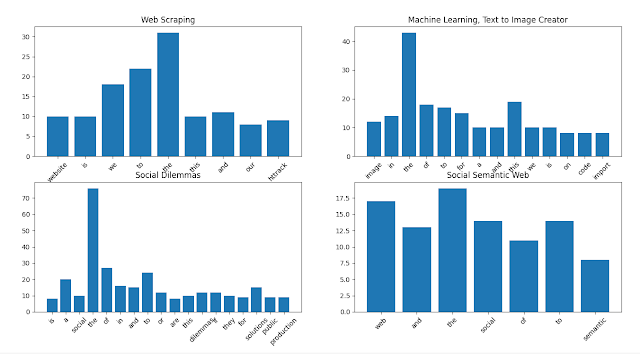

fig, ax = plt.subplots(2, 2)

for i in range(4):

to_plot = {k:v for (k,v) in results[i]["dict"].items() if v > 7}

ax[i//2, i%2].bar(list(to_plot.keys()), list(to_plot.values()))

ax[i//2, i%2].set_title(", ".join(results[i]["labels"]))

ax[i//2, i%2].set_xticklabels(to_plot.keys(), rotation=45)

plt.show()

Running the modified script produces four plots, one per scraped post, each titled with that post's labels. Because Scrapy fetches pages concurrently, which four posts are plotted can vary between runs.
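The plotting loop does two small bookkeeping jobs. It drops words that occur 7 times or fewer, and it maps the flat index "i" to a cell of the 2-by-2 grid with "i // 2" and "i % 2".

The stdlib-only sketch below makes both steps explicit. It also stops at the end of the list when fewer than four posts were scraped. The helper "panel_data" and its input data are hypothetical.

```python
def panel_data(results, threshold=7, rows=2, cols=2):
    """Return (row, col, title, words, counts) for each subplot panel.

    Hypothetical helper: keeps only words counted more than `threshold`
    times and fills the grid row by row, like ax[i // 2, i % 2].
    """
    panels = []
    for i, item in enumerate(results[: rows * cols]):
        row, col = divmod(i, cols)  # same as (i // cols, i % cols)
        kept = {w: c for w, c in item["dict"].items() if c > threshold}
        title = ", ".join(item["labels"])
        panels.append((row, col, title, list(kept), list(kept.values())))
    return panels


# Made-up items in the same shape as those yielded by parse_data
results = [
    {"labels": ["AI", "Tools"], "dict": {"model": 9, "the": 20, "gpu": 3}},
    {"labels": ["Search"], "dict": {"image": 8, "search": 11}},
    {"labels": ["Ethics"], "dict": {"social": 7, "media": 12}},
]

for panel in panel_data(results):
    print(panel)
# (0, 0, 'AI, Tools', ['model', 'the'], [9, 20])
# (0, 1, 'Search', ['image', 'search'], [8, 11])
# (1, 0, 'Ethics', ['media'], [12])
```

Note that "social" (exactly 7 occurrences) is dropped, because the filter keeps only counts strictly greater than the threshold.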

Conclusion

In conclusion, web scraping is a useful tool for analysis and ontology creation. Alternative tools exist, but Scrapy simplifies the process with a comprehensive framework. Spiders, selectors that can be tested interactively in the shell, and request callbacks for following links together streamline data extraction from a wide range of websites.
